Laterite is a data, research, and technical advisory firm based in Rwanda and Ethiopia. We spoke with the Laterite team about their recent work with TechnoServe’s East Africa Coffee Initiative as part of their new embedded advisor model.

Tell us about your work on TechnoServe’s East Africa Coffee Initiative.

What is the project? What is Laterite’s role? Can you expand on how your new service line, the embedded research advisor, works?

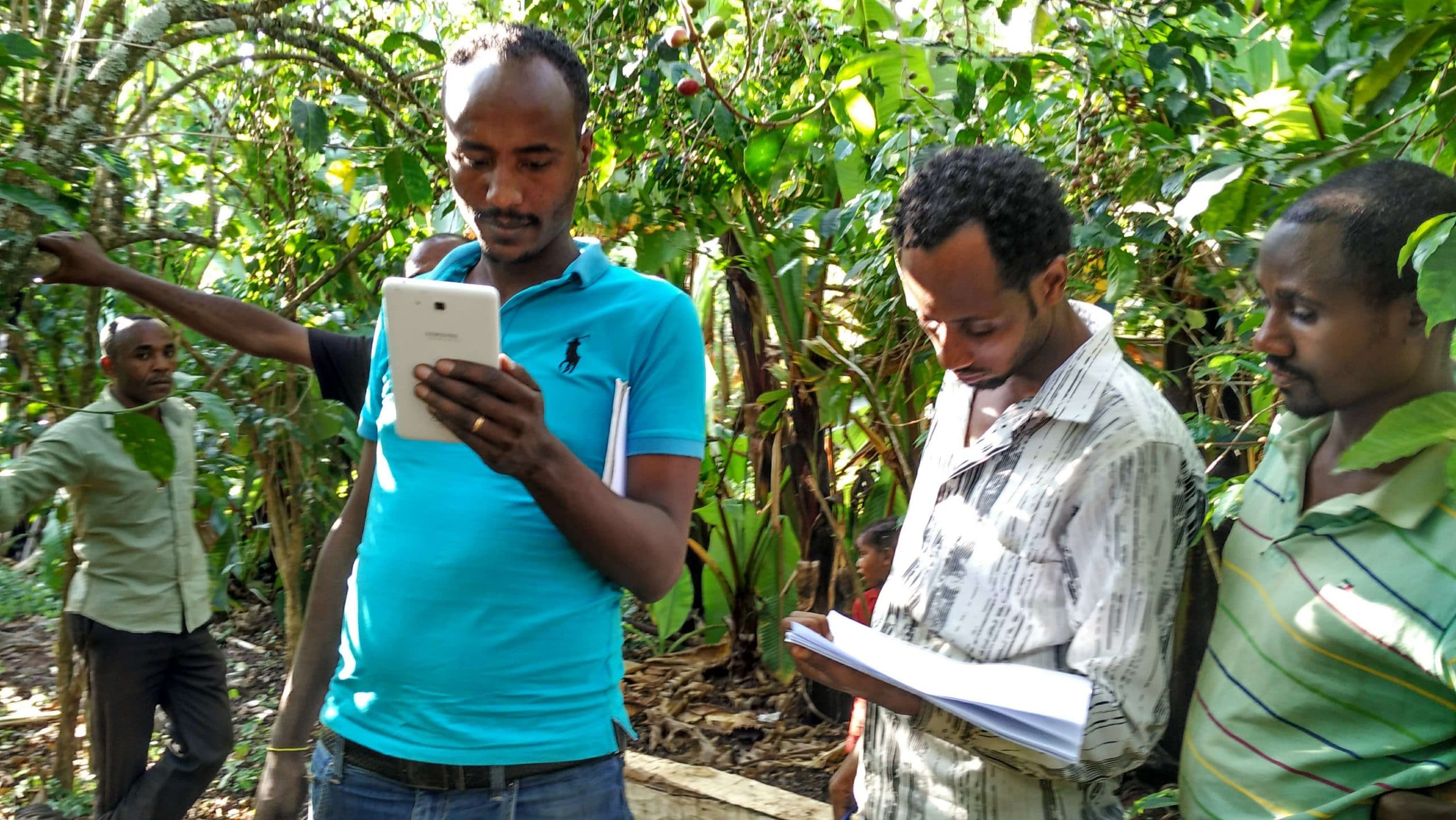

Laterite has been working with TechnoServe’s East Africa Coffee Initiative since 2013. (TechnoServe is an international non-profit that specializes in private sector approaches to development challenges; they work extensively with smallholder farmers and agricultural value chains.) The program provides agronomy and business training to smallholder coffee farmers across Ethiopia, Kenya, Rwanda and South Sudan. The program also works on other parts of the coffee value chain, such as improving the sustainability of coffee processing mills, strengthening cooperatives and improving access to finance.

Last year, Laterite entered a long-term engagement with TechnoServe’s East Africa Coffee Initiative to provide embedded and independent research and evaluation services to their operations in Ethiopia, Kenya, South Sudan and Rwanda.

This model of engagement – embedded yet external research services – is unique. Laterite has researchers situated directly within the East Africa Coffee team to ensure greater harmony between project implementation and research, while drawing on the independence and the analytical and operational capabilities of Laterite’s broader team of experts. To carry out this work, Laterite has established a Project Office in Ethiopia and works closely with TechnoServe’s four country teams across East Africa to:

- Continue to develop the program’s overarching M&E and impact evaluation strategy

- Oversee the implementation of ongoing data collection efforts across four countries

- Conduct all analytic work and ensure that donor reporting requirements are met

- Build greater opportunities for learning into TechnoServe’s M&E systems.

What are some of the things you have been able to achieve using the embedded advisor model in TechnoServe’s East Africa Coffee Program?

Laterite has introduced a variety of research methods and designed a number of tools to measure the impact of TechnoServe’s agronomy training program and learn about its farmers. These include cluster randomized controlled trials; stratifying farmers by program attendance, coffee cooperative or region; and placing additional emphasis on household poverty assessments, nutrition, gender, youth and social network analysis.
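To make the idea of a stratified cluster randomized design concrete, here is a minimal Python sketch, assuming whole clusters (for example cooperatives) are randomized to treatment or control within strata such as regions. The cluster IDs, strata and seed below are hypothetical, and this is an illustration of the general technique rather than Laterite’s actual assignment procedure.

```python
import random
from collections import Counter, defaultdict


def assign_clusters(cluster_strata, seed=2017):
    """Randomly assign whole clusters to treatment or control within each stratum.

    cluster_strata: dict mapping a (hypothetical) cluster ID, e.g. a cooperative,
    to its stratum label, e.g. a region. Returns cluster ID -> "treatment"/"control".
    """
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    by_stratum = defaultdict(list)
    for cluster_id, stratum in cluster_strata.items():
        by_stratum[stratum].append(cluster_id)

    assignment = {}
    for stratum in sorted(by_stratum):
        ids = sorted(by_stratum[stratum])  # sort first so results depend only on the seed
        rng.shuffle(ids)
        n_treat = len(ids) // 2  # in odd-sized strata the extra cluster goes to control
        for i, cluster_id in enumerate(ids):
            assignment[cluster_id] = "treatment" if i < n_treat else "control"
    return assignment


if __name__ == "__main__":
    coops = {"coop_a": "north", "coop_b": "north", "coop_c": "north",
             "coop_d": "south", "coop_e": "south", "coop_f": "south", "coop_g": "south"}
    result = assign_clusters(coops)
    # Count arms per stratum to confirm balance within each region.
    print(Counter((coops[c], arm) for c, arm in result.items()))
```

Randomizing within strata keeps treatment and control balanced on the stratifying variable, and assigning whole clusters rather than individual farmers limits spillovers between neighbors who attend the same trainings.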


Some of our recent achievements include:

- Helped improve data collection, data audit and data analysis processes within TechnoServe – including migrating all the country evaluation data collection platforms to SurveyCTO!

- Conducted a technical review of research design methodologies and sampling given research objectives and budget constraints

- Worked with agronomy experts to gain insights into the profiles of different coffee-farming households and to improve the measurement of best practice adoption

- Introduced several new and interesting socio-economic modules to understand the profile of participant farmers

- Built capacity within TechnoServe’s M&E teams, including extensive training on data analysis using Stata and survey creation in SurveyCTO.

- Future plans include designing mini-experiments to understand and improve implementation of the agronomy training program.

Building a close relationship with TechnoServe’s M&E and implementation teams was crucial to the success of these initiatives. Being “embedded” allowed for close, frequent interactions across teams and for Laterite to develop an in-depth understanding of the organization’s processes and procedures. The embedded yet independent model allowed the Laterite research team to make pointed, specific recommendations for improving the organization’s learning function.

Where are you in the project now? Are there any interesting findings you can share? What are your next steps?

We have successfully completed nearly 20 surveys across Ethiopia, Kenya, Rwanda and South Sudan in the last year using SurveyCTO. Our surveys have measured agronomy best practices and coffee yields, and included modules on the socio-economic and demographic characteristics of participating coffee farmers. In addition, we regularly monitor implementation progress through attendance scorecards for each of the agronomy programs, which the program team uses to track how well the training is being implemented.

We also make a conscious effort to present findings from our research and evaluation activities to the implementation teams. We hope this helps them better understand the farmers they work with and see what is and isn’t working in their program implementation.

Recently, we’ve expanded our work with TechnoServe and are now also supporting the research work for their sustainability projects that conduct independent audits on coffee processing mills in Ethiopia and Kenya.

In terms of next steps, we will collaborate with TechnoServe to jointly seek funding for innovative studies, for example on the drivers of best practice adoption and on how knowledge diffuses within farmer social networks. We hope these studies will form the basis for piloting and testing new approaches to improving the adoption of coffee agronomy best practices.

Tell us about some of the challenges you’ve encountered in the field.

It has been extremely challenging to work across such diverse contexts within tight timeframes. For example, the training program was suspended in South Sudan after the security situation in the country deteriorated in 2016, which meant our M&E activities there were limited to analyzing data from past years. In Ethiopia, we faced repeated communication blackouts throughout the year due to protests and the nationwide state of emergency that followed, which delayed field surveys and our research. In Kenya, the 2017 election disrupted regular activities for a few months and caused a drop in farmer attendance in the training program.

We’ve had to be creative to continue our research, and SurveyCTO has been very helpful in overcoming some of these challenges. Access to the SurveyCTO website and server was blocked multiple times in Ethiopia, so offline features have been invaluable: transferring data offline from tablets to a computer, validating forms offline, and uploading surveys to all tablets without an internet connection. In 2016, when a survey was paused midway due to security issues and there was no mobile internet in those areas, the only way to recover all the completed surveys in reasonable time was for the survey team to travel all the way from Western Ethiopia to the Addis Ababa office. Thanks to guidance from the SurveyCTO team, we created a mobile hotspot and conducted an offline data transfer from the tablets to a computer. In the end, hundreds of surveys (along with crucial photos) were transferred successfully from all the tablets.

In May 2017, on the verge of a survey launch, we faced a complete internet shutdown in Ethiopia. Again, we solved problems on the fly. Our survey supervisor brought all the tablets to the Addis Ababa office (a day’s journey) to get the survey and then traveled back to the field. Using SurveyCTO Sync’s offline validation and transfer functionality, we still managed to load the survey onto all the tablets and launch it on schedule.

What are some of the innovative/interesting ways you’re using SurveyCTO?

While at Laterite we are experimenting with some of SurveyCTO’s more complex functionality for internal purposes, in this project we have spent most of our time improving existing systems within TechnoServe. Some interesting examples are:

- Migrating all evaluation data collection for TechnoServe’s Coffee program to the SurveyCTO platform, some from paper surveys and some from other data collection platforms. Having trained the teams on SurveyCTO’s various features, we have seen it become widely accepted by local M&E staff as the best data collection software for program evaluation they have used so far!

- Embedding images within our surveys to show examples of coffee diseases. Previously, enumerators carried large laminated photos, which they frequently either didn’t use or lost.

- Requiring photo evidence throughout the survey, which greatly improves the quality of data on best practice adoption. For example, in Kenya, the TechnoServe agronomy expert was able to review the photos for “composting best practice” from the field and realized that manure piles were being misclassified as compost-making sites! This resulted in immediate feedback to the data collection team to revisit how to better identify compost-making in coffee farms.

- Using the Google Sheets integration and the online Data Explorer, which have dramatically improved survey monitoring. As part of our capacity building, we have trained M&E teams to create and track progress on a Google Sheets dashboard, catch suspicious outliers on individual questions, compare progress with daily field reports, and provide detailed feedback faster and more effectively.

- Using the text audit feature to record the time spent on each question. We use this to audit incoming data at multiple points during the survey and identify problematic data collectors (for example, a high median time per survey, moving through questions too fast, or spending unusually little or much time on individual questions).

- Reviewing text audits collected during field testing and piloting to gauge the length of individual modules and of the overall survey. This is an important factor when deciding whether to add new modules or delete unnecessary questions. With this data, we were able to make the case for including an experimental module to measure social networks within the Rwandan coffee farming community.

- Using features such as incomplete submissions and re-submission of already-sent surveys from tablets to ensure that progress recorded in field logs matches the data on the server.

- Using offline data transfer to move completed surveys to a computer in remote locations or when the government has restricted internet access in the country.
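The text audit checks described in the list above (median survey time per enumerator, questions answered too fast, and module length from pilot data) can be sketched in a few lines of Python. This is a minimal illustration, assuming the audit files have already been reshaped into hypothetical (enumerator, survey, question, seconds) rows; SurveyCTO’s actual audit export has a different layout, and the 0.5x/1.5x thresholds are illustrative assumptions, not the values used in this project.

```python
from collections import defaultdict
from statistics import median

# Each record is a hypothetical flattened text-audit row:
#   (enumerator_id, survey_id, field_name, seconds_on_field)
# SurveyCTO's own audit export has a different layout; reshape it first.


def survey_totals(records):
    """Total time per (enumerator, survey), summed over all questions."""
    totals = defaultdict(float)
    for enum_id, survey_id, _field, seconds in records:
        totals[(enum_id, survey_id)] += seconds
    return totals


def flag_survey_speed(records, low=0.5, high=1.5):
    """Flag enumerators whose median survey time is far from the team median.

    The 0.5x / 1.5x thresholds are illustrative assumptions.
    """
    per_enum = defaultdict(list)
    for (enum_id, _survey_id), total in survey_totals(records).items():
        per_enum[enum_id].append(total)
    medians = {e: median(t) for e, t in per_enum.items()}
    team = median(medians.values())
    flags = {}
    for e, m in medians.items():
        if m < low * team:
            flags[e] = "too fast"
        elif m > high * team:
            flags[e] = "too slow"
    return flags


def fast_on_question(records, field, low=0.5):
    """Enumerators whose median time on one question is well below the typical enumerator."""
    per_enum = defaultdict(list)
    for enum_id, _survey_id, f, seconds in records:
        if f == field:
            per_enum[enum_id].append(seconds)
    medians = {e: median(s) for e, s in per_enum.items()}
    if not medians:
        return []
    typical = median(medians.values())
    return sorted(e for e, m in medians.items() if m < low * typical)


def module_minutes(records, module_of):
    """Rough module length from pilot audits: sum of per-question medians, in minutes.

    module_of is a hypothetical mapping from field name to module name.
    """
    per_field = defaultdict(list)
    for _e, _s, field, seconds in records:
        per_field[field].append(seconds)
    totals = defaultdict(float)
    for field, secs in per_field.items():
        totals[module_of.get(field, "unassigned")] += median(secs)
    return {m: t / 60 for m, t in totals.items()}


if __name__ == "__main__":
    pilot = [
        ("A", "s1", "q1", 60), ("A", "s1", "q2", 60),
        ("A", "s2", "q1", 50), ("A", "s2", "q2", 70),
        ("B", "s3", "q1", 55), ("B", "s3", "q2", 65),
        ("C", "s4", "q1", 10), ("C", "s4", "q2", 20),
    ]
    print(flag_survey_speed(pilot))  # {'C': 'too fast'}
    print(fast_on_question(pilot, "q1"))  # ['C']
    print(module_minutes(pilot, {"q1": "agronomy", "q2": "agronomy"}))
```

Medians are used rather than means so that a single interrupted interview (for example, one paused for a security issue) does not distort an enumerator’s typical timing.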

Is there anything else you’d like to share about the project or survey design in general? Any advice for others?

We strongly believe that M&E is not only for donor reporting. Rather, M&E presents an opportunity to learn about the beneficiaries and about implementation in a manner that can feed back into continuous program improvements. We are keen to explore the idea of building mini-experiments into the monitoring process to make better use of existing data collection activities and to create a quicker feedback loop between research and implementation.


Another design consideration we think is important: while RCTs are widely accepted as the “gold standard” for measuring program impact, we often find that they are not the best option for our clients. One of the main drawbacks is that an RCT requires research design and program implementation to be carried out in perfect harmony. The ability to measure impact through an RCT is highly sensitive to any deviation from the planned methodology, which in the field can happen often and unexpectedly. RCTs can also be very expensive, results don’t arrive until a program is complete, and the ability to derive meaningful results can easily be compromised by changes in the field. While we fully agree that RCTs have an important place in development research, we find that our clients could get better value for money against their learning objectives by leveraging and improving their existing M&E systems.

In a number of TechnoServe’s projects, we have recommended using M&E resources more efficiently: exploring alternative methods (e.g. quasi-experimental or non-experimental methods) for inference, or focusing on learning more about the implementation and the direct beneficiaries of TechnoServe’s training program. Given the resources an organization has, a critical question to ask is: what research set-up will help us demonstrate impact AND maximize learning? If a typical evaluation set-up leads to more assumptions and caveats than results, we recommend focusing on alternative impact evaluation strategies, which can yield not only greater learning about the program and its beneficiaries but also savings that can be reinvested in further exploration.